The World Ethical Data Foundation (WEDF), a non-profit global group of AI experts and data scientists, has recently unveiled a voluntary framework aimed at ensuring the safe development of artificial intelligence products. The foundation, whose 25,000 members include staff from tech giants like Meta, Google, and Samsung, seeks to address the ethical challenges and potential risks associated with AI technology.

The framework, presented as an open letter and endorsed by hundreds of signatories from the AI community, features a comprehensive checklist of 84 questions. These questions are intended to guide developers at the outset of their AI projects, encouraging them to consider various ethical implications and potential pitfalls. By initiating a transparent and collaborative approach, the WEDF aims to foster responsible AI development and implementation.

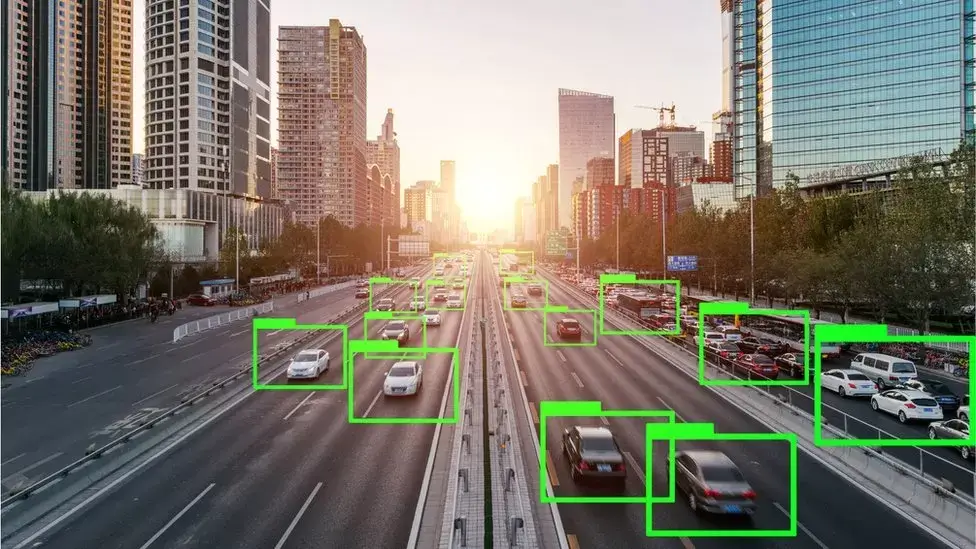

AI, with its remarkable ability to emulate human-like actions and responses, relies on vast amounts of data and algorithms. These algorithms, akin to sets of rules, enable computers to make predictions, solve complex problems, and even learn from mistakes. However, the use of AI also introduces challenges such as bias, privacy concerns, and potential legal implications, which the foundation seeks to address through its framework.

Among the questions posed by the WEDF are those relating to bias mitigation and the consequences of law-breaking. Developers are encouraged to explore how they can prevent AI products from incorporating bias and how they would handle situations where the AI’s output might lead to illegal activities. The framework also delves into issues surrounding data protection laws in different regions, the transparency of AI interactions with users, and the fair treatment of human workers involved in training the AI.

In a proactive move, the WEDF has extended an invitation to the public to submit their own questions. Embracing inclusivity and a diversity of perspectives, the foundation plans to consider these contributions during its upcoming annual conference. By opening up the conversation to a wider audience, the WEDF seeks to ensure that the framework remains comprehensive and adaptable to evolving ethical challenges in the AI landscape.

The need for such a framework has become increasingly apparent as AI technology progresses. Vince Lynch, founder of IV.AI and an advisor to the WEDF board, likens the current stage of AI development to the “Wild West.” While exciting advancements have been made, concerns over intellectual property, human rights, and ethical considerations have come to the forefront.

Governments and policymakers are also taking note of the potential risks associated with AI. In response, the UK’s Prime Minister, Rishi Sunak, has appointed tech entrepreneur and AI investor Ian Hogarth to lead an AI task force. Hogarth’s focus on understanding the risks associated with cutting-edge AI systems aligns with the WEDF’s mission to hold developers accountable for their AI products’ ethical implications.

Various initiatives worldwide aim to ensure responsible AI development. In the European Union, Competition Commissioner Margrethe Vestager is leading efforts to create a voluntary code of conduct for AI development in collaboration with the US government. The code would encourage companies involved in AI to adhere to a set of ethical standards, though it would not be legally binding.

In the industry, companies like Willo, a Glasgow-based recruitment platform, have been attuned to the ethical considerations of AI. Co-founders Andrew Wood and Euan Cameron emphasize the importance of not allowing AI to make decisions that should remain human-driven. Their dedication to transparency aligns with the WEDF’s framework, as they believe it is crucial for users to know when they are interacting with AI-generated content.

The release of the WEDF’s voluntary framework marks a significant step towards a safer, more responsible AI landscape. By encouraging developers to proactively address ethical concerns and consider potential consequences, the foundation aims to build trust in AI technology and promote its beneficial use for society as a whole. As the AI community continues to grow and evolve, such initiatives will be essential in shaping a positive future for artificial intelligence.